IBM i journaling is an optional, built-in feature of the operating system that tracks every change to a given object, such as a library, a DB2 database, a data queue, or an IFS (integrated file system) object. The purpose of journaling is to be able to recover the object in the event of a failure. To start journaling, customers must create a journal for the object. Once journaling is active, every change (write, update, delete, etc.) is first written to a journal receiver associated with the journal, and then the change is made to the object. After a system crash, the last save of the object is restored and the journal entries are then applied. While journaling does address a system outage or crash, it has a performance cost: jobs must wait for the update to the object's journal receiver and then for the update to the object itself. The most noticeable impact is on the run time of batch jobs.
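The write-then-apply order and the restore-then-replay recovery can be illustrated with a small Python toy model. This is only a sketch of the idea, not IBM i code: the class name, the `put`/`delete` operations, and the in-memory list standing in for a journal receiver are all assumptions made for illustration.

```python
# Toy model of journaling: not IBM i code, all names are hypothetical.
class JournaledObject:
    def __init__(self):
        self.data = {}        # stands in for the journaled object
        self.receiver = []    # stands in for the journal receiver

    def change(self, op, key, value=None):
        # 1. Record the change in the journal receiver first.
        self.receiver.append((op, key, value))
        # 2. Only then apply the change to the object itself.
        self._apply(self.data, op, key, value)

    @staticmethod
    def _apply(target, op, key, value):
        if op == "put":
            target[key] = value
        elif op == "delete":
            target.pop(key, None)

    def save(self):
        # A save is a copy of the object plus the receiver position at save time.
        return dict(self.data), len(self.receiver)

    def recover(self, saved_data, saved_position):
        # Restore the last save, then replay the entries journaled after it.
        restored = dict(saved_data)
        for op, key, value in self.receiver[saved_position:]:
            self._apply(restored, op, key, value)
        return restored


obj = JournaledObject()
obj.change("put", "A", 1)
snapshot, position = obj.save()       # last save before the "crash"
obj.change("put", "B", 2)
obj.change("delete", "A")
recovered = obj.recover(snapshot, position)
```

In this sketch, `recovered` matches the object's state just before the simulated failure, because every change made after the save is still present in the receiver.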

There are three types of journals: local, remote, and system audit. The local journal process is described above. Remote journals are used in High Availability (HA) and/or Disaster Recovery (DR) applications. In addition to being written to the local journal receiver, each update to an object is also written to the remote journal receiver. This remote update can be done synchronously or asynchronously. If it is done synchronously, the job has to wait until the write is complete on the remote system, which adds further latency to every update. To reduce the added latency, the customer can configure the remote journal for asynchronous transfer. However, if the source system fails, the remote journal receiver might be missing the last transfer. System audit journals are used to audit access and/or updates to any given object.
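The trade-off between synchronous and asynchronous transfer can be put into rough numbers. The sketch below is a deliberately simple model: the function name and every timing value (0.5 ms local write, 2 ms remote round trip, 100,000 updates) are made-up assumptions, not measurements from any IBM i system.

```python
def job_wait_ms(updates, local_write_ms, remote_round_trip_ms, synchronous):
    """Estimate how long a job waits on journal writes (toy model)."""
    # In synchronous mode each update also waits for the remote write;
    # in asynchronous mode the job only waits for the local receiver.
    per_update = local_write_ms + (remote_round_trip_ms if synchronous else 0.0)
    return updates * per_update


# Hypothetical workload and timings, chosen only for illustration.
sync_ms = job_wait_ms(100_000, 0.5, 2.0, synchronous=True)
async_ms = job_wait_ms(100_000, 0.5, 2.0, synchronous=False)
```

Under these assumed values the synchronous job waits five times longer than the asynchronous one, which is the latency the job pays for never having the remote receiver fall behind.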

Most IBM i software replication solutions rely on the system function of remote journaling, including Mimix, Maxava, Robot HA, iTera, and Quick EDD. An IBM i journal records all adds, updates, and deletions in the DB2 database, any changes to data areas or data queues, and changes to the IFS.

The remote journal function copies any change to the journals to a remote system for HA and DR purposes. While this does address the HA/DR function, it can impact the run time of tasks or batch jobs. This is due to the job having to wait until the write or update is successful on the remote journal. Journal caching can help solve this issue.

To address the performance issue, you can install Option 42 of the IBM i operating system – HA Journal Performance (journal caching). Journal caching used to be a chargeable feature, but as of June 2022 it is free. It is distributed with IBM i V7R5 but has to be downloaded and installed on V7R4 and V7R3. This feature improves performance by updating the journals in memory and physically writing them to disk only in groups, thereby drastically reducing the write I/O wait time.
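The effect of grouping writes can be seen in a toy model that counts physical writes. This is a sketch, not how the operating system implements caching: the `group_size` parameter is a hypothetical stand-in for whatever grouping the system actually chooses.

```python
# Toy model of journal caching: not IBM i code, all names are hypothetical.
class CachedReceiver:
    def __init__(self, group_size):
        self.group_size = group_size   # assumed number of entries per disk write
        self.cache = []                # entries held in main memory
        self.disk = []                 # entries physically written
        self.disk_writes = 0

    def append(self, entry):
        self.cache.append(entry)
        if len(self.cache) >= self.group_size:
            self.flush()

    def flush(self):
        # One physical write moves the whole cached group to disk.
        if self.cache:
            self.disk.extend(self.cache)
            self.cache.clear()
            self.disk_writes += 1


uncached = CachedReceiver(group_size=1)    # every entry forces a write
cached = CachedReceiver(group_size=50)     # entries are written in groups
for n in range(1005):
    uncached.append(n)
    cached.append(n)
```

With these assumed numbers the uncached receiver performs 1,005 physical writes and the cached one performs 20. The last five entries of the cached receiver are still only in memory, so they would be lost if the contents of main memory were not preserved.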

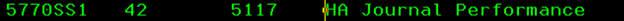

You can use the Display Software Resources (DSPSFWRSC) command to check whether Option 42 is installed.

After you have installed Option 42, you can enable journal caching with the JRNCACHE parameter on the Create Journal (CRTJRN) or Change Journal (CHGJRN) commands. The IBM Navigator for i equivalent is the Cache journal entries option on the Create Journal and Journal Properties dialogs.

Journal caching can be tuned with the Change Journal Attributes (CHGJRNA) command, whose CACHEWAIT parameter sets the maximum time that the system waits before writing cached journal entries to disk. Setting CACHEWAIT limits the loss of lingering changes when there is a lull in journal entry arrival.
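The role of a maximum wait time during a lull can be sketched with a small Python model. It is an illustration only: the class, the `tick` method, and the 30-second value are assumptions, and the clock is passed in explicitly so the example is deterministic.

```python
# Toy model of a cache wait limit: not IBM i code, all names are hypothetical.
class TimedCache:
    def __init__(self, cache_wait_seconds):
        self.cache_wait_seconds = cache_wait_seconds
        self.cache = []                # entries held in main memory
        self.disk = []                 # entries physically written
        self.oldest_cached_at = None   # arrival time of the oldest cached entry

    def append(self, entry, now):
        if not self.cache:
            self.oldest_cached_at = now
        self.cache.append(entry)

    def tick(self, now):
        # Called periodically; writes lingering entries once the wait limit is hit.
        if self.cache and now - self.oldest_cached_at >= self.cache_wait_seconds:
            self.disk.extend(self.cache)
            self.cache.clear()
            self.oldest_cached_at = None


timed = TimedCache(cache_wait_seconds=30)   # hypothetical wait limit
timed.append("entry-1", now=0)              # then no further entries arrive
timed.tick(now=10)
pending_at_10 = list(timed.cache)           # still only in memory
timed.tick(now=30)
pending_at_30 = list(timed.cache)           # written out by the wait limit
```

In this model a shorter wait limit narrows the window in which a lone entry sits only in memory, at the price of more frequent small writes.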

Journal caching is not recommended if it is unacceptable to lose even one recent change in the event of a system failure where the contents of main memory are not preserved. This type of journaling is directed primarily toward batch jobs and may not be suitable for interactive applications where single-system recovery is the primary reason for using journaling.

While journal caching can drastically improve the run time of any job updating DB2 objects or IFS directories, your backup and recovery run book might have to be modified. The best practice for recovering quickly from a system failure when batch jobs use journal caching is to create a save file before the batch job runs and another after it completes. With software or hardware replication, the before-save file is stored on the target system. If the source system crashes, recovery on the target system consists of restoring the before-save file(s) and rerunning the batch job. As noted above, recent interactive transactions will be lost and will have to be re-entered.
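The save, crash, restore, and rerun sequence can be walked through in a few lines of Python. This is a toy model under stated assumptions: the account names, the balances, and the `run_batch` job are invented, and plain dictionaries stand in for the source system, the save file, and the target system.

```python
def run_batch(records):
    # Hypothetical batch job: post a fee of 10 to every account.
    return {account: balance - 10 for account, balance in records.items()}


source = {"ACCT1": 100, "ACCT2": 250}

# 1. Save before the batch job; replication places this copy on the target.
before_save = dict(source)

# 2. The source system crashes mid-batch. Cached journal entries that were
#    only in memory are gone, so the partial result cannot be trusted.
source = None

# 3. On the target: restore the before-save copy, then rerun the batch job.
target = dict(before_save)
target = run_batch(target)
```

The approach works in this sketch because the batch job produces the same result whenever it starts from the same saved state, which is why the run book relies on a save taken immediately before the job.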

Connect with the author: Randy Watson – Cloud Solutions Architect at Skytap
