It takes light about eight minutes to travel from the Sun to Earth. If you step on a thorn with bare feet, it might take about half a second for the pain signal to reach your brain. Those are examples of latency. It’s the delay between endpoints or actions. In some cases, it’s not important. In other situations, slight delays mean everything. When you step on the car brake pedal, you expect the car to slow down immediately!

There are many forms of latency. In technology, it usually means network, database, disk, or application latency. For this article, let’s focus on network latency and its potential impact on cloud migrations involving mixed architectures like IBM Power AIX and traditional x86 servers. Both are among the most popular compute architectures in on-prem data centers today, and both are being migrated to the cloud.

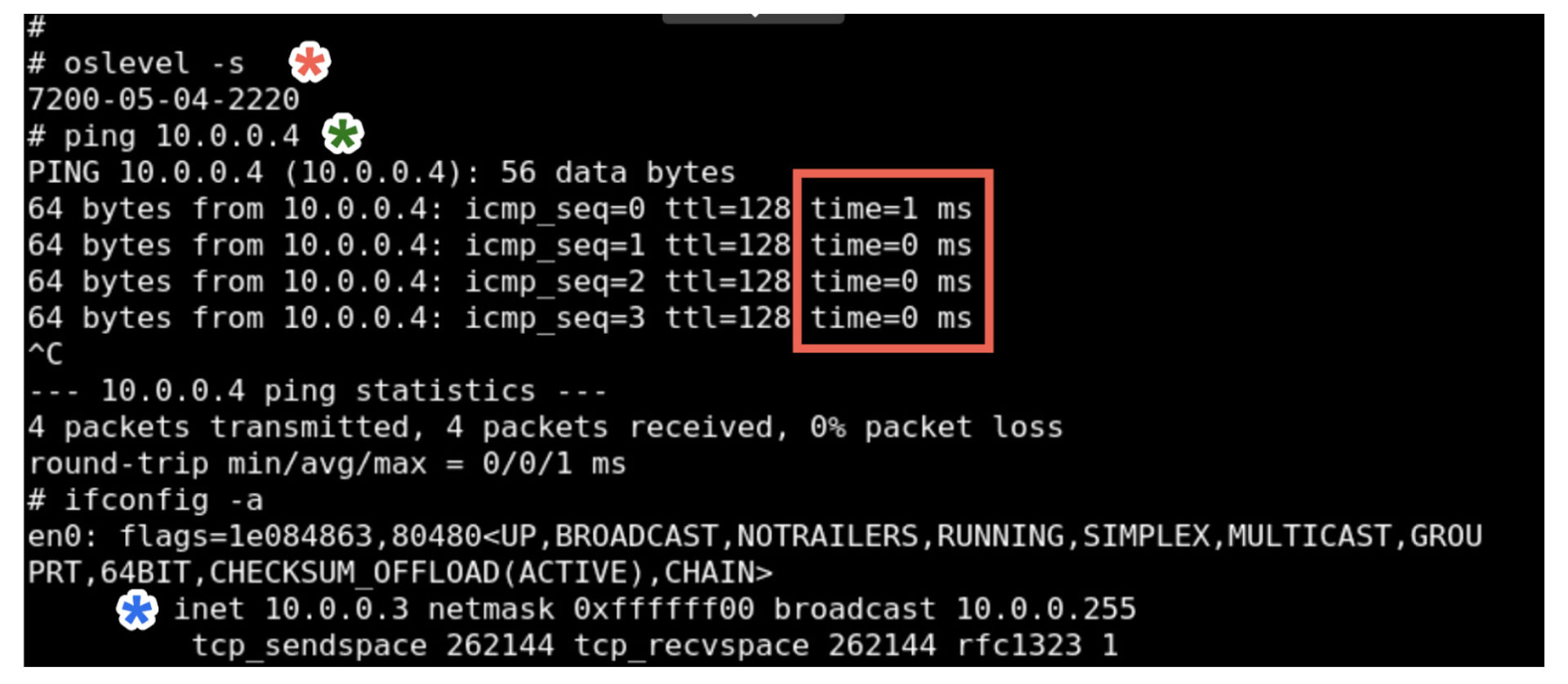

Look at this screenshot. It shows the latency, or more simply, the time to ping from an IBM AIX Power LPAR to an Intel x86 VM (cross-architecture, but on the same local network).

AIX 7.2 with IP Address: 10.0.0.3

Pinging 10.0.0.4, which is an Intel x86 VM running Linux.

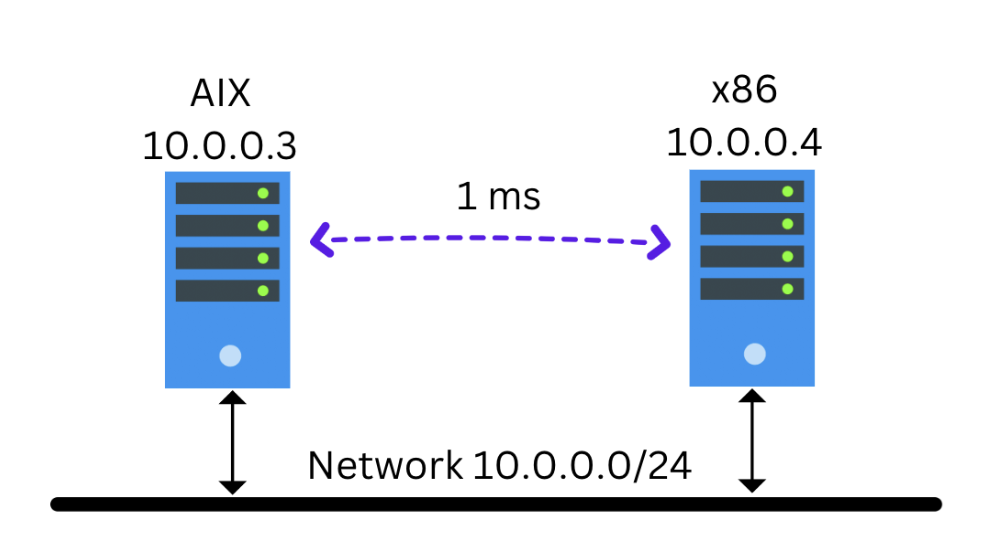

The ping command reports anything less than 1 ms as “0”, so the ping time from 10.0.0.3 (AIX) to 10.0.0.4 (Intel x86) is 1 ms (millisecond) or less. The LPAR and the x86 server are on the same logical network (10.0.0.x).
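As a rough companion to the ping test, the sketch below times TCP connection setup instead of ICMP echo, because raw ICMP sockets usually need elevated privileges. A TCP connect completes in about one network round trip, and `time.perf_counter()` keeps the sub-millisecond detail that a whole-millisecond ping display rounds away. The function names, the sample count, and the port used in the usage note are assumptions for illustration, not part of the original test.

```python
import socket
import statistics
import time


def tcp_connect_latency_ms(host, port, samples=5, timeout=2.0):
    """Time TCP connection setup to host:port; returns one value in ms per sample."""
    results = []
    for _ in range(samples):
        start = time.perf_counter()
        # create_connection returns once the TCP handshake has completed,
        # which takes roughly one network round trip.
        with socket.create_connection((host, port), timeout=timeout):
            elapsed = time.perf_counter() - start
        results.append(elapsed * 1000.0)
    return results


def summarize(latencies_ms):
    """Return min, median, and max so sub-millisecond values stay visible."""
    return {
        "min_ms": min(latencies_ms),
        "median_ms": statistics.median(latencies_ms),
        "max_ms": max(latencies_ms),
    }
```

For example, `summarize(tcp_connect_latency_ms("10.0.0.4", 22))` would probe the x86 VM from the screenshot, assuming it accepts connections on port 22 (hypothetical; any open TCP port works).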

The result is very good, but why is low latency important, especially when crossing computing architectures as in our example above, from IBM Power running AIX to Intel x86 running Linux?

A popular IT industry trend is migrating midrange systems running IBM AIX or IBM i to the cloud. The goal is to empty the data center so it can be shut down to save costs. Many organizations are doing this, and moving legacy or specialty applications to the cloud is a viable IT strategy. The migration is typically done with a “lift and shift” approach that leaves the original applications unchanged, sometimes without even changing the IP addresses or hostnames of the original on-prem legacy systems. But there is one scenario where extreme caution is warranted, and that is where latency comes into play.

Many legacy applications require very low latency to operate correctly. Traditional specialty applications that do file locking or record locking require very low latency when multiple users access the same data. Financial apps that do complex multi-step transactions require a specific latency to coordinate all the components. Real-time sensors or equipment running in a manufacturing plant might be sensitive to particular latencies in the messages they send and receive. A system tuned to process a certain number of transactions will also behave differently if latency increases.
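To make the last point concrete, here is a back-of-the-envelope sketch. It assumes a single worker that handles transactions one at a time, where each transaction needs a fixed number of sequential network round trips plus some local processing time. The function name and all of the numbers are hypothetical, chosen only to show the shape of the effect.

```python
def max_transactions_per_second(rtt_ms, round_trips_per_txn, processing_ms=0.0):
    """Upper bound on throughput for one worker doing strictly sequential round trips."""
    txn_ms = round_trips_per_txn * rtt_ms + processing_ms
    if txn_ms <= 0:
        raise ValueError("total time per transaction must be positive")
    return 1000.0 / txn_ms


# Hypothetical workload: 10 round trips and 5 ms of processing per transaction.
on_prem = max_transactions_per_second(rtt_ms=0.5, round_trips_per_txn=10, processing_ms=5.0)
split_sites = max_transactions_per_second(rtt_ms=5.0, round_trips_per_txn=10, processing_ms=5.0)

print(f"0.5 ms round trip: {on_prem:.1f} transactions/s")      # 100.0
print(f"5.0 ms round trip: {split_sites:.1f} transactions/s")  # 18.2
```

Under these assumptions, a few extra milliseconds per round trip cut the per-worker throughput by more than a factor of five, even though the application code has not changed.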

Many mature on-prem applications have specific latency requirements between their core components. IBM Power, Intel x86, and other specialty compute architectures together logically make up “the application.” Migrating that entire system to the cloud means you must also deliver the same latency that existed on-prem. If latency slips, the application might not work, or might not behave as stably as it did on-prem.

Your preferred cloud vendor must be able to deliver the same types of latency that you have achieved over years of tuning on-prem. If your applications are made up of both IBM Power and x86, and these components talk to each other, then your cloud vendor must have both of these architectures “close enough” together to meet your original latency requirements.

Some cloud providers have x86 and Power “split” into two completely separate offerings that are not tightly integrated at a physical level. For example, the x86 offering might be in a co-location (colo) facility that is separate from the IBM Power offering. In this case, the latency between the two might be larger than what your applications can tolerate. This is an important technical qualification point to investigate early in your cloud migration. If you don’t, you might expend a lot of resources performing a cloud migration only to find out you can’t hit your latency requirements. If this happens, you will be painted into a corner with few alternatives other than to step in the wet paint. This will be messy and take time and money to fix.
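One way to approach this qualification step is to write down a latency budget for each pair of components and compare it with measurements taken in a candidate cloud environment. The sketch below does that with a nearest-rank percentile. The path names, budgets, and sample values are made up for illustration.

```python
import math


def percentile(samples, pct):
    """Nearest-rank percentile of a non-empty list of numbers."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct / 100.0 * len(ordered)))
    return ordered[rank - 1]


def check_latency_budget(measured_ms, budget_ms, pct=95):
    """Return (path, observed_ms, budget_ms, ok) for every path in the budget."""
    report = []
    for path, limit in budget_ms.items():
        observed = percentile(measured_ms[path], pct)
        report.append((path, observed, limit, observed <= limit))
    return report


# Hypothetical budgets and measurements, in milliseconds.
budget = {"aix-db -> x86-app": 1.0, "x86-app -> x86-web": 5.0}
measured = {
    "aix-db -> x86-app": [0.4, 0.5, 0.6, 0.5, 3.0],
    "x86-app -> x86-web": [1.2, 1.1, 1.4],
}

for path, observed, limit, ok in check_latency_budget(measured, budget):
    status = "OK" if ok else "OVER BUDGET"
    print(f"{path}: p95 {observed} ms (budget {limit} ms) {status}")
```

Checking a high percentile rather than the average is a deliberate choice here: a path that is usually fast but occasionally slow can still break an application that relies on locking or tightly coordinated transactions.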

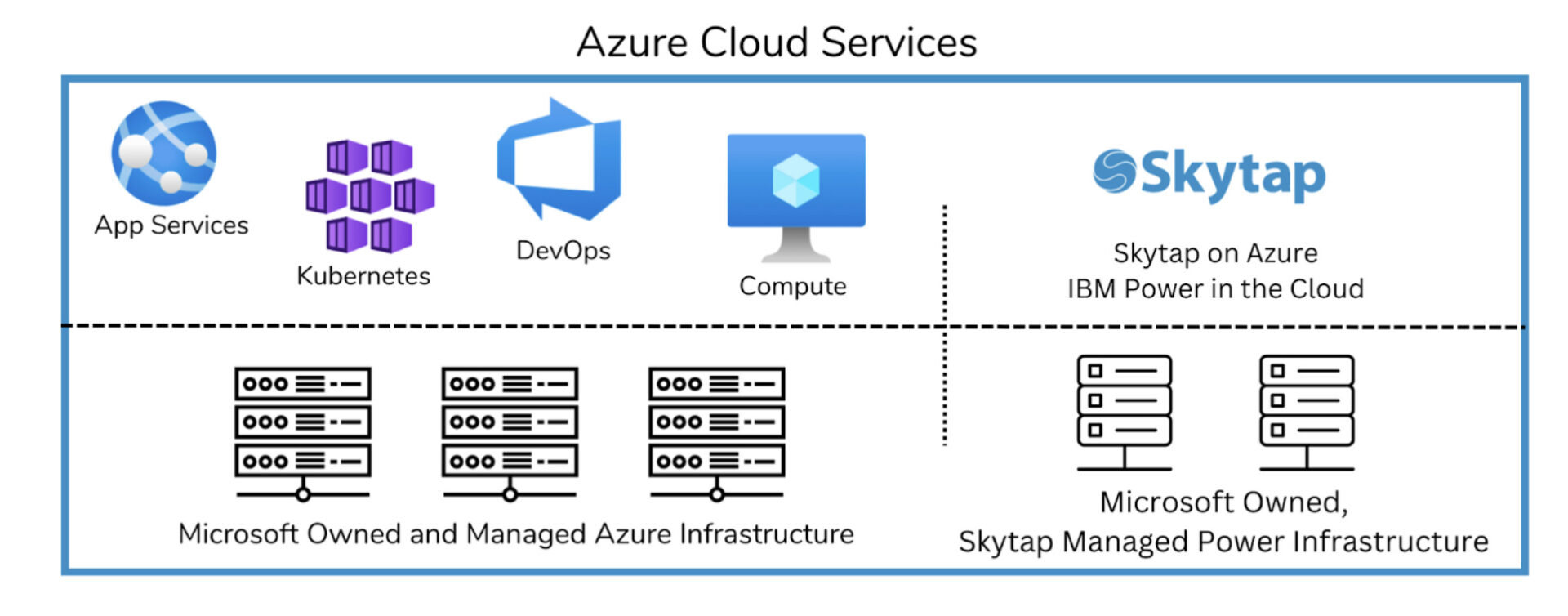

Let’s see how this can be solved in Azure. With Azure, you can run both cloud-native x86 virtual machines as well as IBM Power LPARs running AIX, IBM i, and Linux. The high-level architecture of Azure supporting both x86 and IBM Power looks like this:

With both IBM Power and x86 very close together in the same region, low latency between components becomes achievable.

What if you need “extremely low latency,” in the range of less than 5 ms, between components like IBM Power and x86, all running in Azure? Are you painted into a corner?

No, you are not. There is an Azure solution to this difficult low-latency problem.

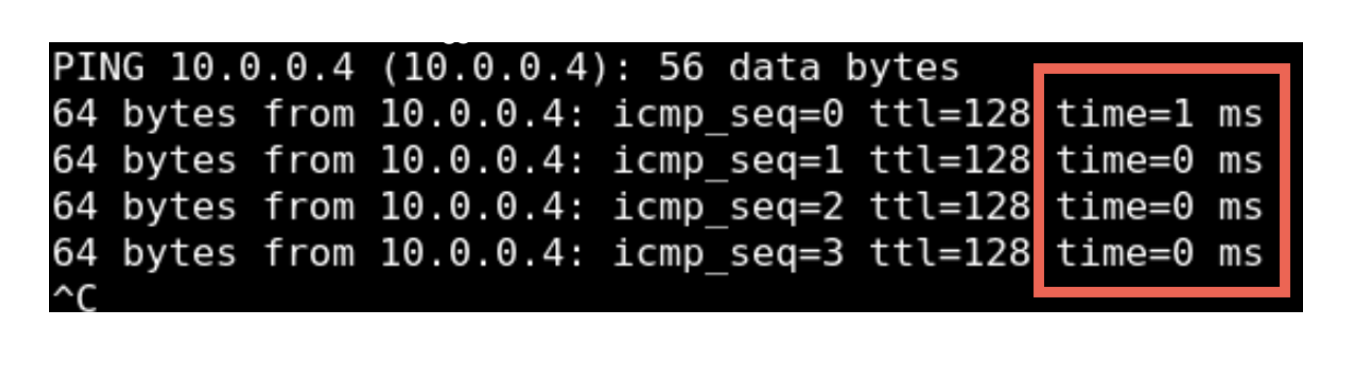

Let’s look at the original screenshot again of our latency test. The test measures the latency from an IBM Power LPAR running AIX to an auxiliary x86 VM that makes up part of the overall application ecosystem:

This result was actually achieved in Azure! It was not taken from on-prem. It shows that between an IBM Power LPAR running AIX and an auxiliary x86 VM connected to the same subnet, the latency is too low for the ping command to report anything other than 0 ms. The result is as good as or better than what is typically delivered on-prem.

How is this done?

Skytap on Azure supports an optimized configuration that allows certain x86 VMs to be located “very close” to IBM Power LPARs. Instead of running as Azure native VMs, these x86 VMs run “inside” the Skytap on Azure service. This provides an additional option for hosting auxiliary x86 servers that require very low latency when communicating with IBM Power LPARs. Because of this ability in Skytap, very low latency requirements can be met.

To summarize:

- Azure provides two standard options for hosting x86 servers:

  - as Azure native VMs

  - as part of the Azure VMware Solution

- Skytap on Azure allows IBM Power to also run in the Azure data center

- Skytap on Azure supports the ability to host x86 VMs in close proximity to IBM Power to achieve ultra-low latency. This ability provides a third Azure x86 hosting option when very low latency is important.

With Skytap on Azure, you won’t get painted into a latency corner. When migrating to the cloud, make sure you consider the network latency requirements between all of the application components, both Power and x86. Pick a cloud vendor that supports those requirements.